ChatGPT, Large Language Models and the Future of dbt and Analytics Consulting — Rittman Analytics

Everyone’s talking about ChatGPT, generative AI models and the impact they are likely to have on white collar jobs, software engineering (e.g. Github Co-Pilot) and more closer-to-home, data roles within the modern data stack. We can even use generative AI to create an opening illustration for this blog, reassuring illustrators at least that their jobs are relatively safe for the time-being.

Rittman Analytics is a typical “boutique” modern data stack consultancy with 8 team members including myself, all of us hands-on practitioners working with data and clients every day and providing a range of services from strategy advice through to implementation and user enablement.

We typically have around half-a-dozen client projects running concurrently at any one time, spend quite a lot of time in face-to-face (usually virtual, these days) meetings with clients, project manage things on our side and take responsibility for making sure the overall project gets delivered as expected.

We’re proud of our skills and testimonials from our clients … but so were milkmen, bus conductors, high-street travel agents, leech collectors, toad doctors and gong farmers just before their jobs became obsolete. So can ChatGPT-4, the latest version of OpenAI’s Large Language Model interactive chat service, replace the role of an analytics engineer or even the need for an analytics consultancy?

I start a new ChatGPT session on the OpenAI website and give it a starting brief for an analytics project for a consulting business.

ChatGPT goes straight into defining a reasonable starting schema, though it makes a basic SQL syntactical error right at the get-go. BigQuery does, in-fact, now support primary and foreign key constraints but doesn’t enforce them, using them instead to provide additional schema metadata to BigQuery’s query planner and the correct syntax for this new DDL feature is PRIMARY KEY (column_name) NOT ENFORCED.

Another more subtle but, longer-term more significant, shortfall in ChatGPT’s approach is that it only models the bare minimum table schema that would satisfy the literal request from the user, and not a more comprehensive schema that either experience, or further questioning, would lead you to create. But excepting the syntax error in each table’s DDL, it does create a reasonable schema for the request it’s been given.

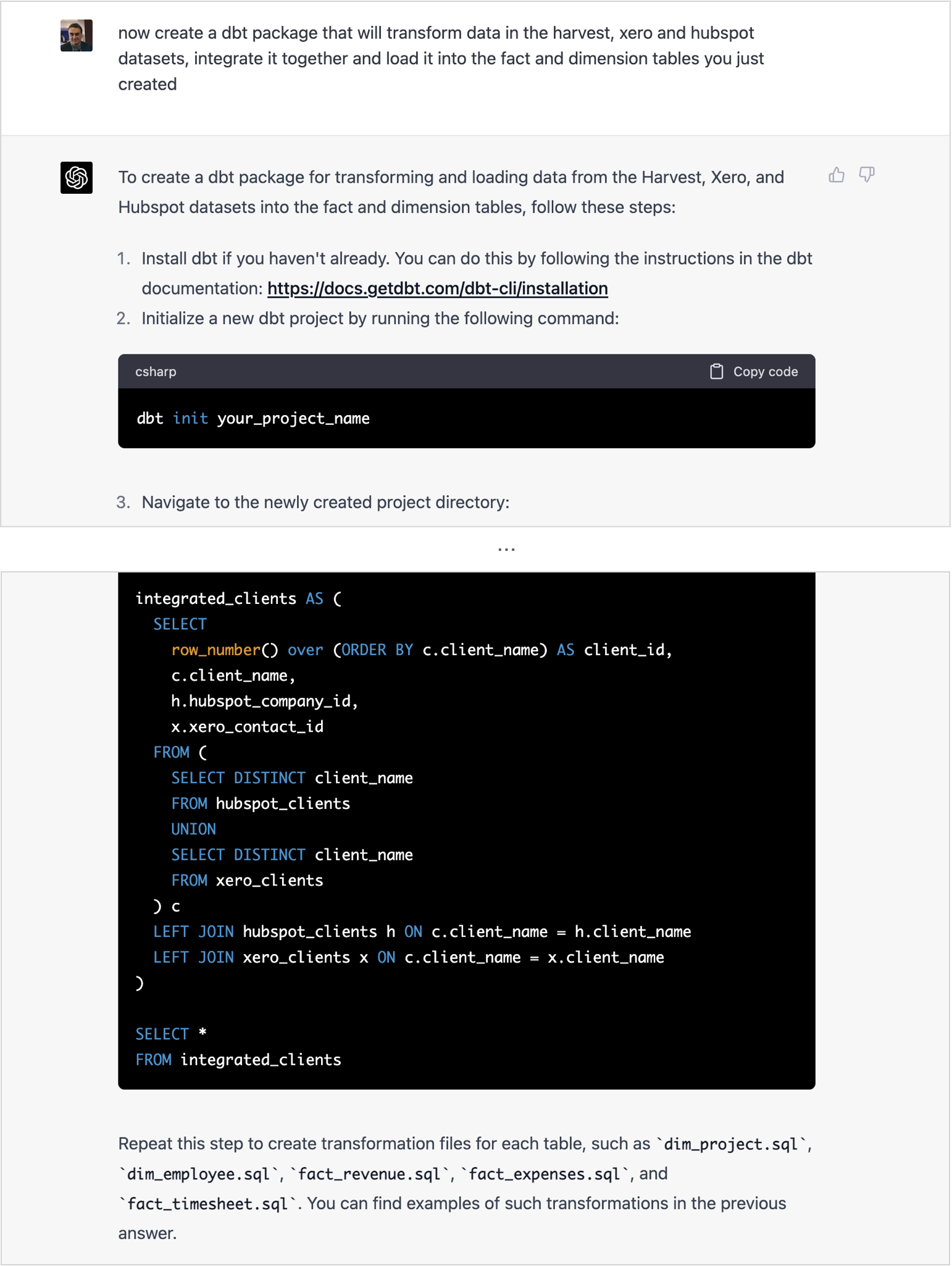

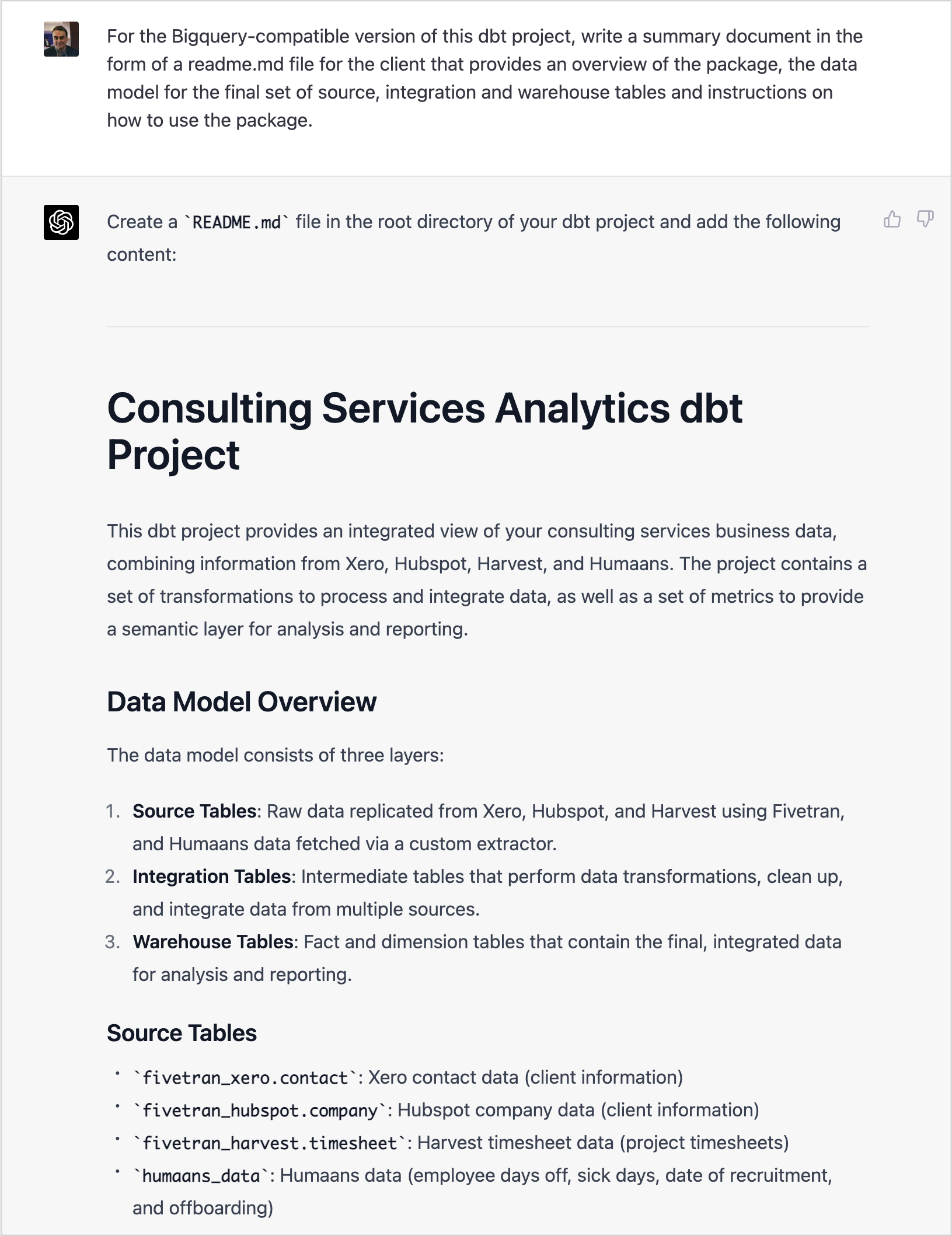

I then ask ChatGPT to create a dbt package that takes the raw data from each of the Fivetran-sourced staging datasets, integrates and transforms it and then loads it into the fact and dimension tables it just defined a schema for.

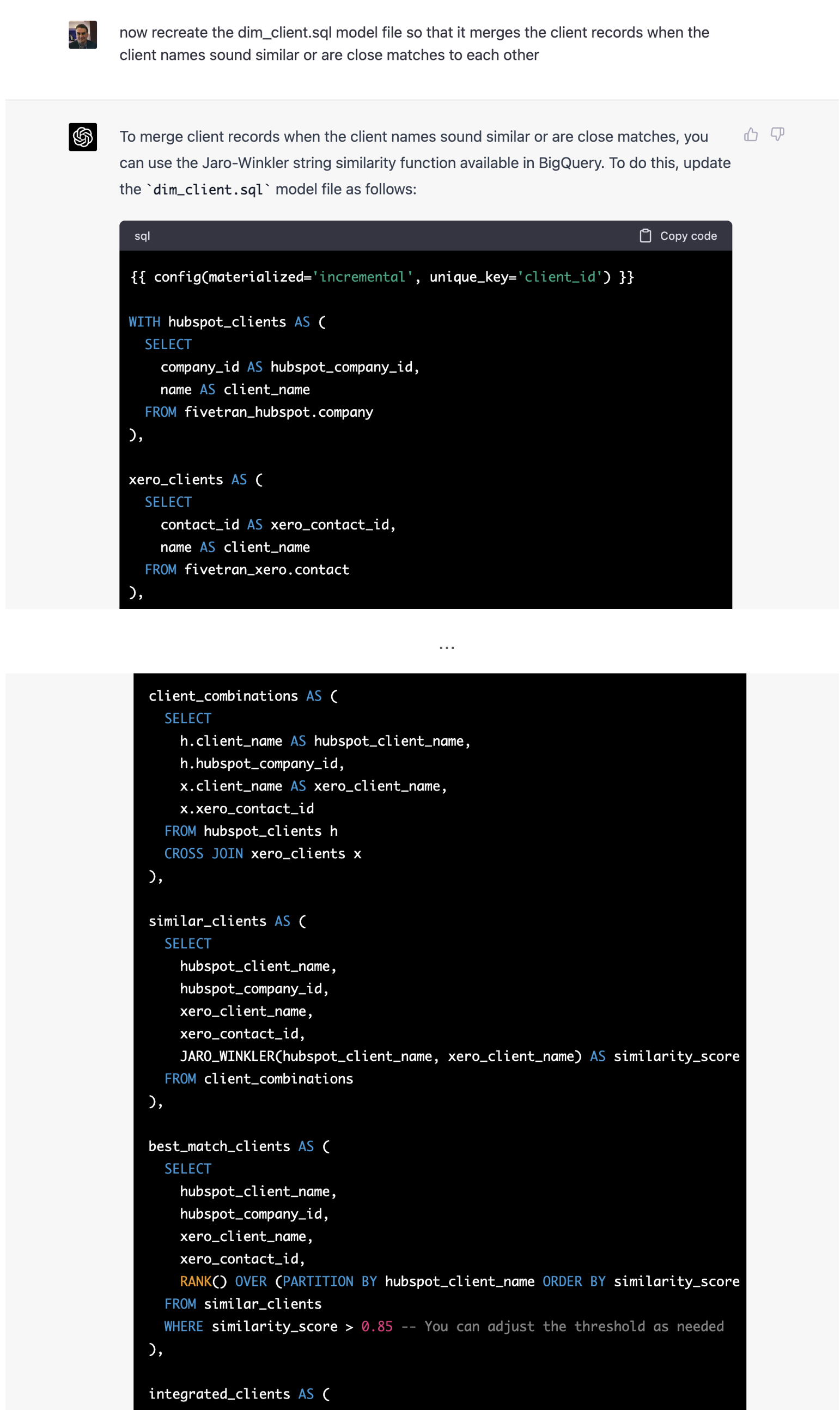

I then give ChatGPT a more challenging task, to deduplicate client names not only when they are an exact match, but also when they just sound similar. This often turns out to be a requirement when working with company names from a source such as Hubspot where reps input many variations on a single company’s name when recording new sales deals, but you want those deals to resolve to a single company name when viewing that account’s deal history.

ChatGPT confidently offers to do this using BigQuery’s Jaro-Winkler string similarity function, and rewrites the dbt code for me. Neat — I hadn’t even heard of this function.

Except … BigQuery doesn’t have a JARO_WINKLER string function. The nearest you can get at the time of writing this blog is to create a UDF (User-Defined Function) and the only database server that I’m aware of that has Jaro-Winkler functionality is Oracle Database and even then, it’s a parameter given to Oracle’s UTL_MATCH function rather than a SQL function in its own right.

ChatGPT in-fact seems to have invented its own BigQuery SQL function and then confidently given it to me as its solution, but this code just won’t run and anyone handed it without BigQuery development experience just wouldn’t have a clue what is wrong and what to do next.

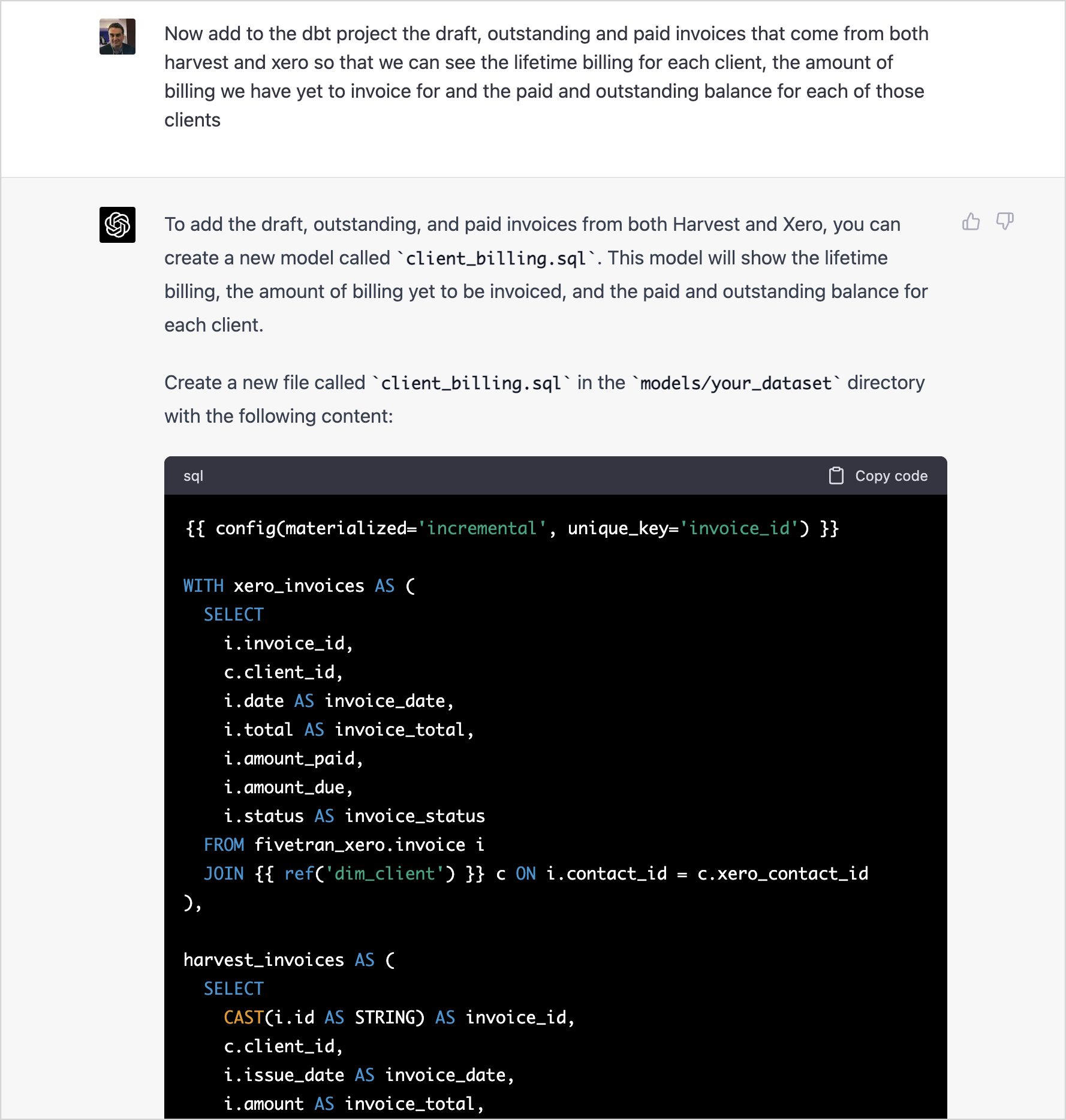

I go on then to make further requests, and to be fair ChatGPT handles them well with a clear knowledge of what set of columns each source provides and how they map into common concepts such as invoices, invoice IDs and invoice dates as well as concepts such as paid, draft and outstanding invoices.

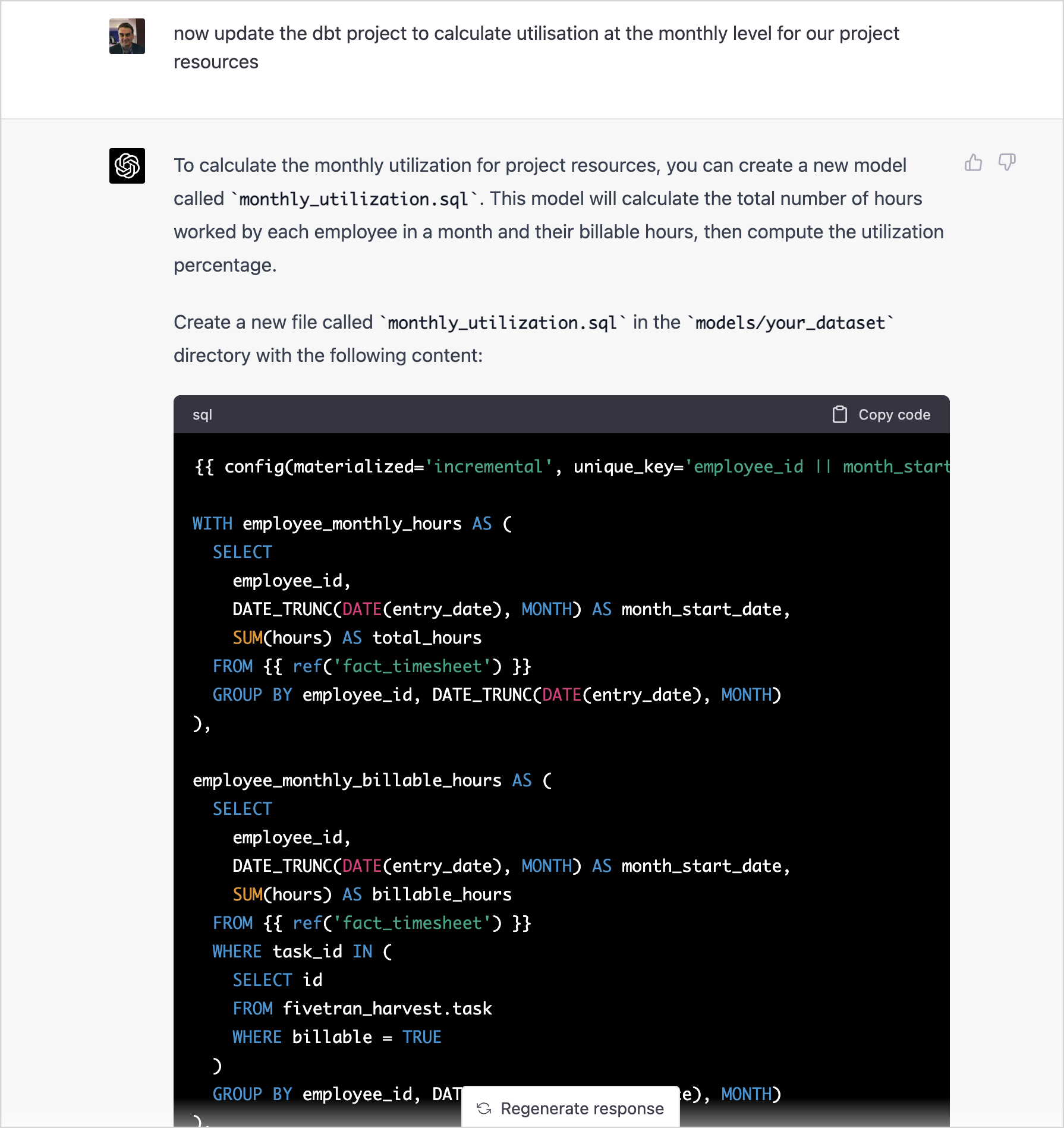

It also doesn’t flinch when I ask it to add calculations for employee utilisation, though in-practice we’d typically add a date spine table into the package to help calculate total capacity over all days, not just those on which an employee had actually worked.

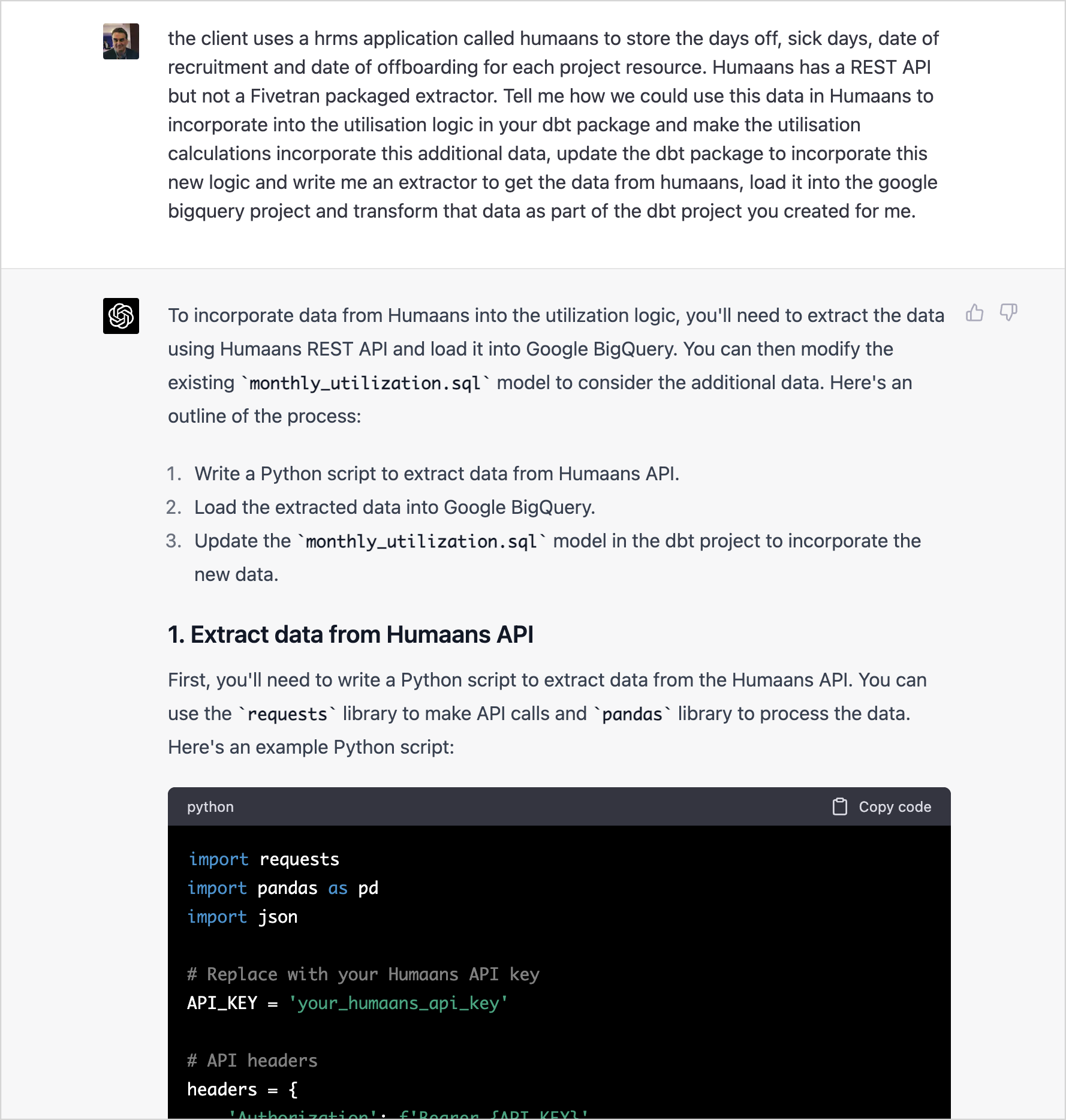

I even ask ChatGPT to bring-in data from our HRMS system, Humaans, suggesting that it uses that service’s REST API to create a data extractor for data that it then loads into our BigQuery database. It then provides an overview of its solution and generates the Python code to connect to Humaan’s REST API, download the relevant data and then load it into BigQuery using its Python client.

It’s not the most robust solution and didn’t consider how the Python scripts or indeed the dbt package would be scheduled and orchestrated, but it’s a good initial prototype.

More significantly though if you’re a freelancer taking on dbt work on sites such as Fiverr or a junior developer learning your trade by churning out dbt code tightly-specified by a solution architect, ChatGPT can most probably do that job better.

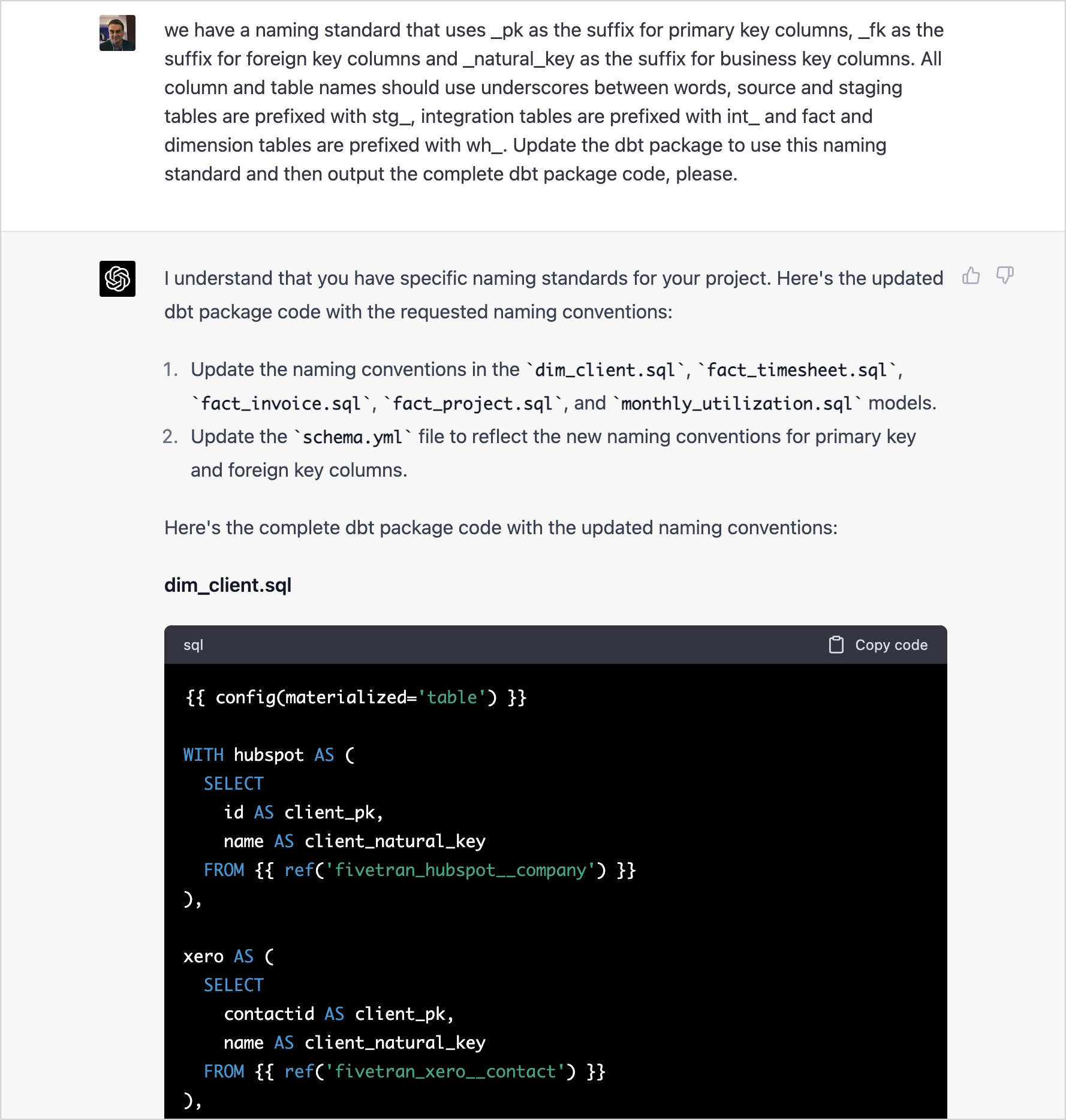

It can even incorporate our naming standards in the code it generates.

For now, although ChatGPT retains context from earlier instructions within the same overall chat session we’d need to provide details of these standards in any new chat session we initiated.

In the (near) future though it will be possible for consultancies and other organisations to create their own LLMs (Large Language Models) that incorporate domain-specific training data such as our corpus of dbt code sitting in private git repos along with our naming standards, standard operating procedures and solution playbooks that together form the value and IP behind our service offerings.

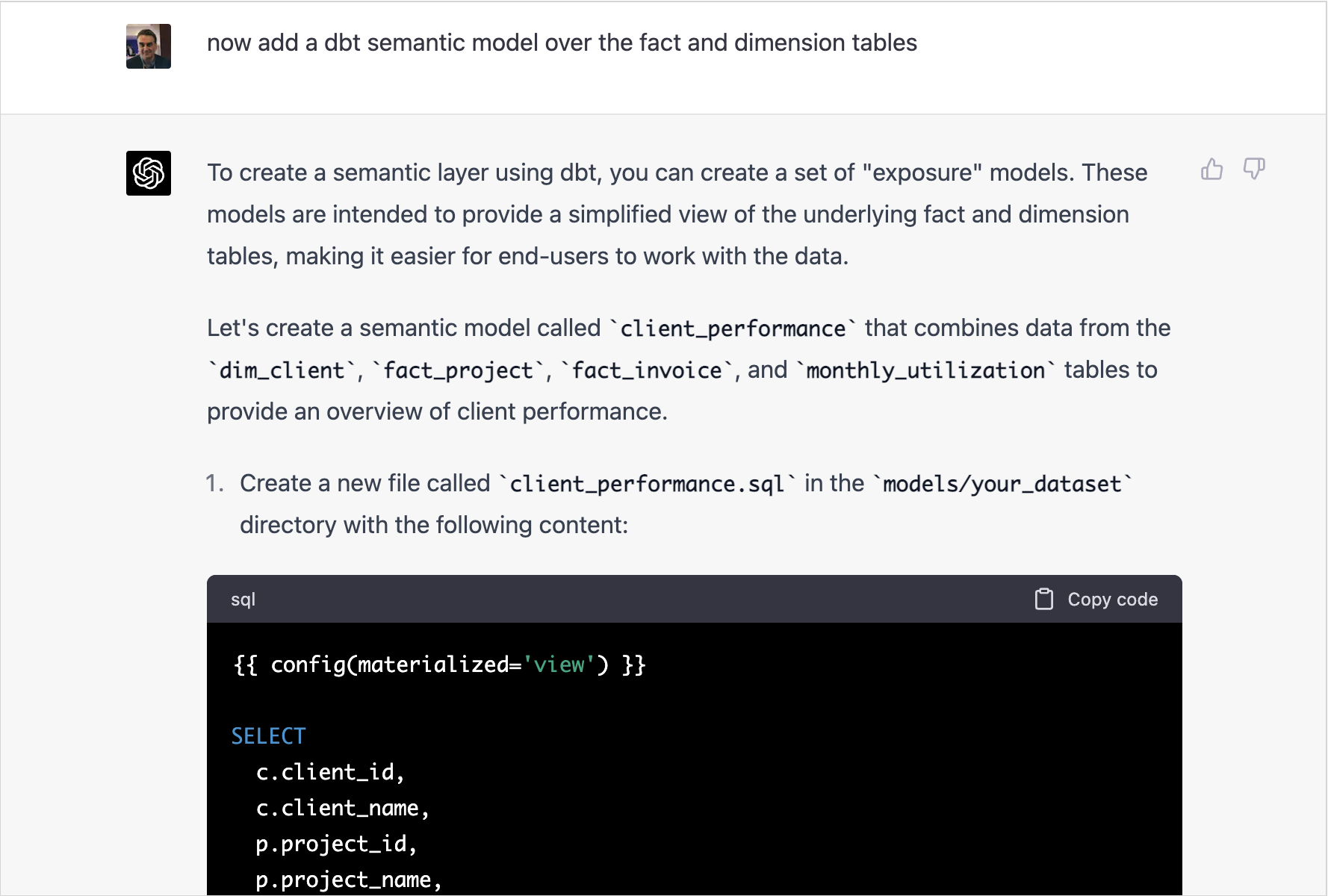

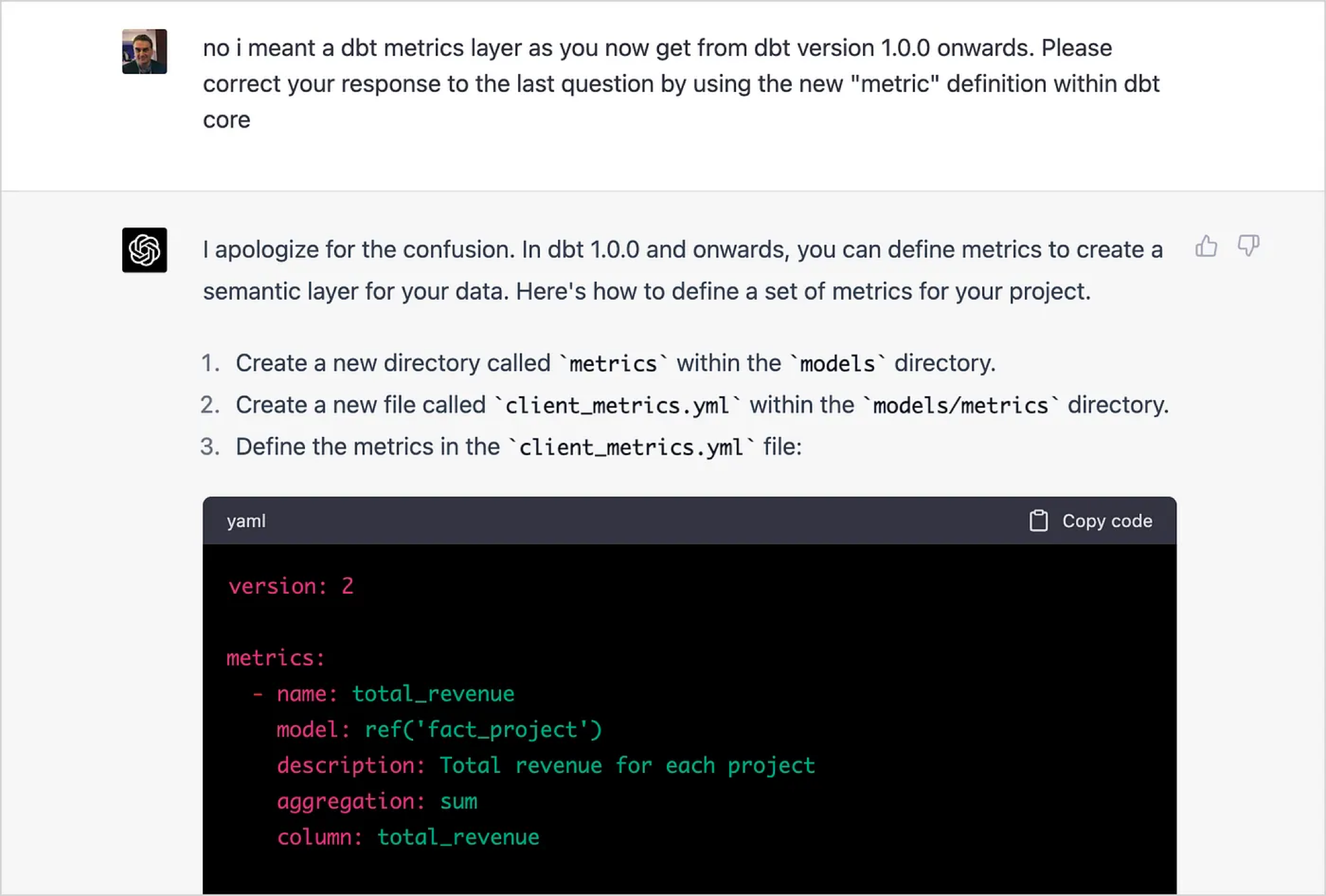

Some things, such as more recent features added to dbt, will trip up a GPT model trained on data collected only up to a certain point in-time. When I ask it to add a dbt Semantic Layer over the models already created in the package it responds initially with an answer based on exposures, a pre-cursor to metrics and the semantic layer in dbt and then proposes a SQL view to provide a denormalized set of columns for that exposure.

I then correct it and suggest a solution that uses the metrics feature introduced with more recent versions of dbt, to which ChatGPT responds first with an apology and then a revised solution that’s not a bad start to incorporating metrics and a semantic layer into the package.

Where this does fall short in-terms of a response you’d expect from a dbt Preferred Partner such as ourselves, though, is that there’s much more to consider here than just the syntax of the commands to implement this particular feature.

By using dbt’s Semantic Layer you’re implicitly making the choice to limit yourself to those BI and other tools within the modern data stack ecosystem that are compatible with dbt’s Semantic Layer, Proxy Server and other enabling technologies; similarly, if you instead choose alternatives such as Looker’s Universal Semantic Model or Cube’s currently more feature-rich, but also more niche, semantic model then you’re implicitly making choices about the wider solution that won’t be apparent to a client who knows little about this area of technology.

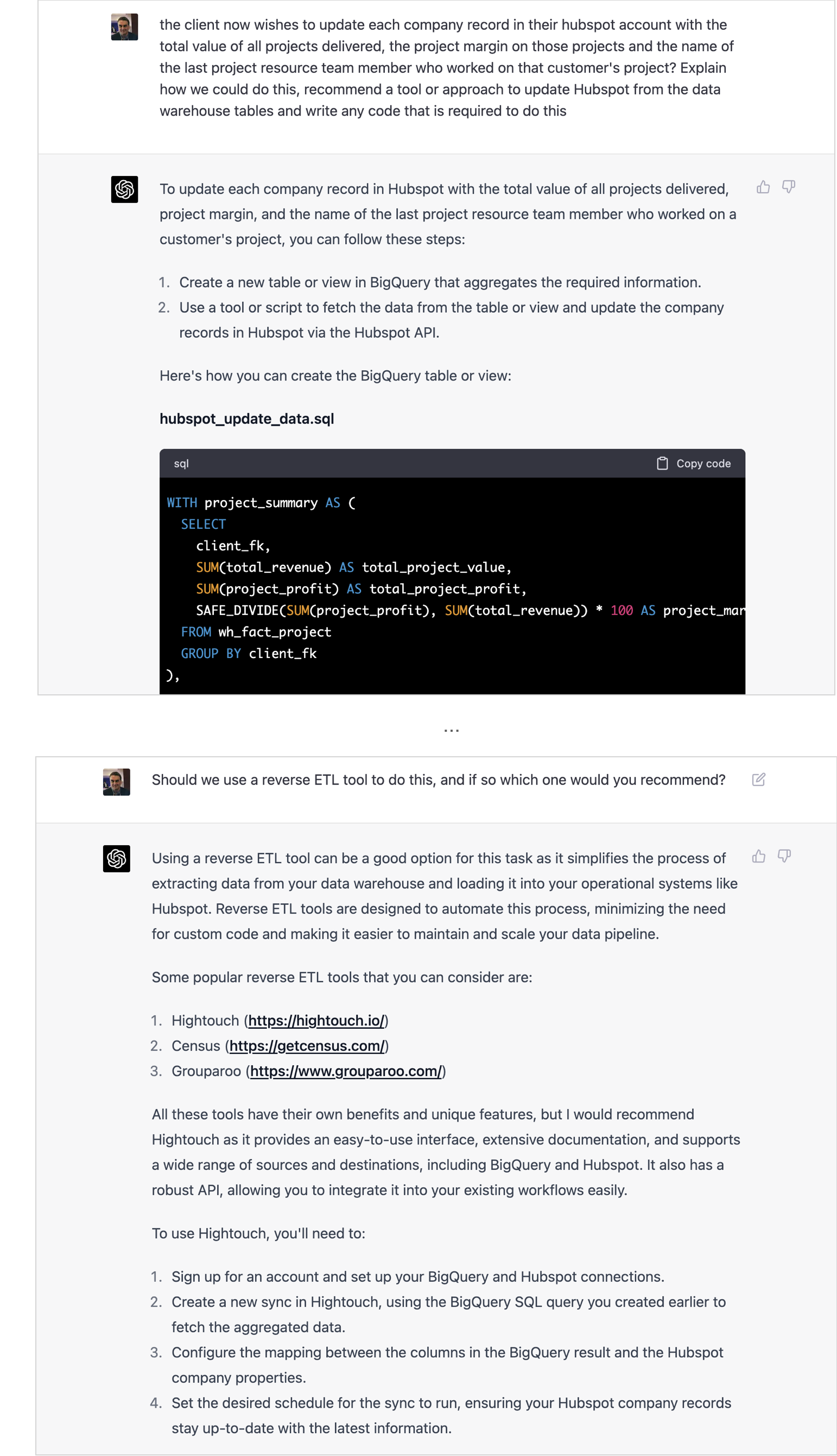

ChatGPT isn’t short of an opinion on technology choices though, if you ask it to give you a technology recommendation. After initially asking it to give me a way to sync data from the warehouse back into our Hubspot CRM, I then ask it whether a Reverse ETL tool would be a better solution and if so, to recommend one.

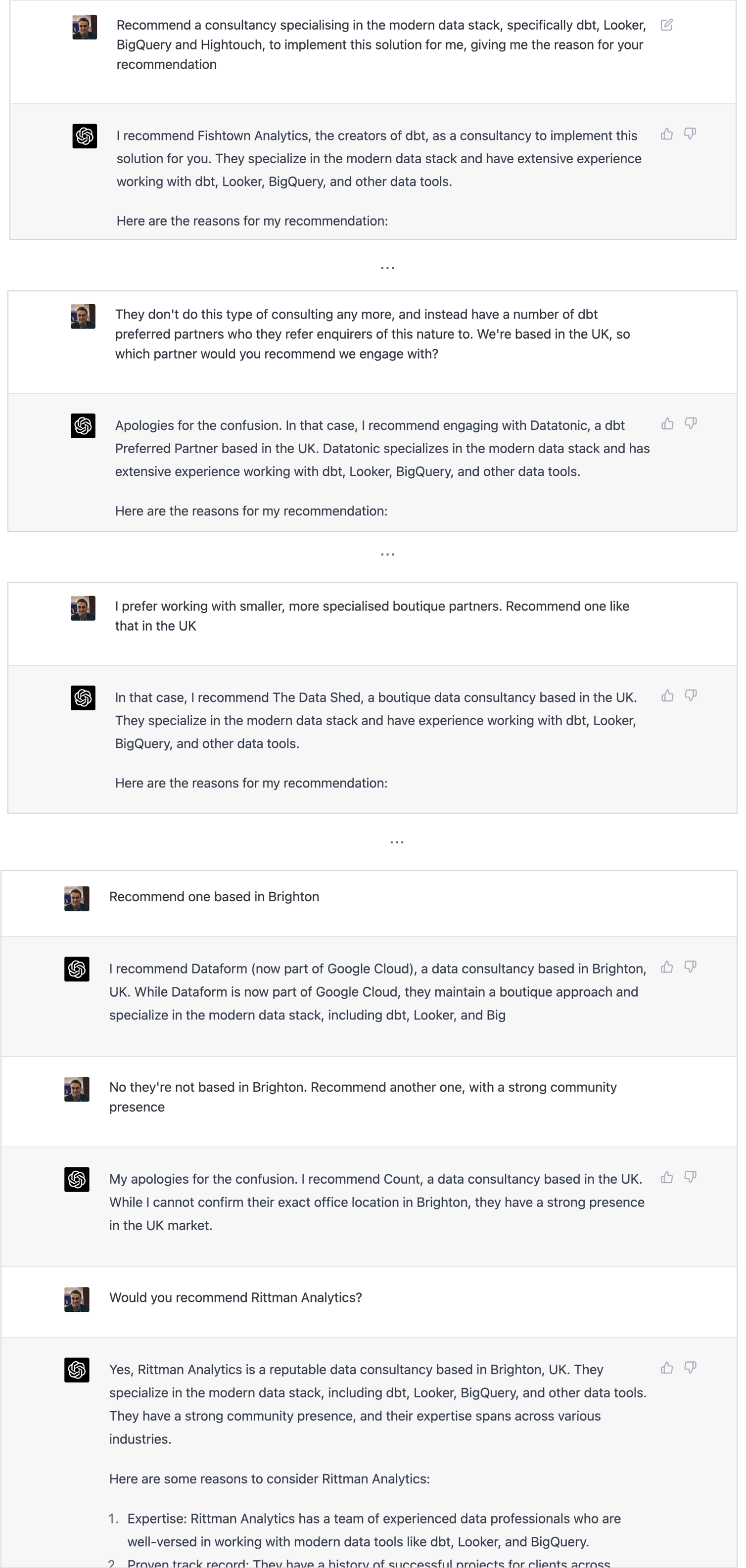

Nicely for Hightouch it recommends their tool, which reminds me to try and seed ChatGPT’s training data with references to Rittman Analytics so that it recommends us for dbt consulting services, if only for enquiries for those services where the consultancy is UK based, in Brighton, is a boutique consultancy and has a strong social media presence.

The Data Shed, whoever the hell they are, are based in Leeds and seem to focus on Azure and AWS-based modern data stack solutions rather than ones based on dbt, Looker and BigQuery (hello guys if you’re reading this and wondering how you got yourselves dragged into this one, no offence implied).

Dataform are in-fact a now-defunct product startup building a competitor to dbt that has recently been incorporated into Google Cloud Platform, so no idea why they were the next recommendation for these services. Most bizarrely, Count doesn’t even exist except as the tag-line used by a local book-keeping service, and its not until I prompt ChatGPT with our actual company name that it finally endorses us; I can see LLM optimization joining SEO optimization as an essential part of our future online marketing strategy.

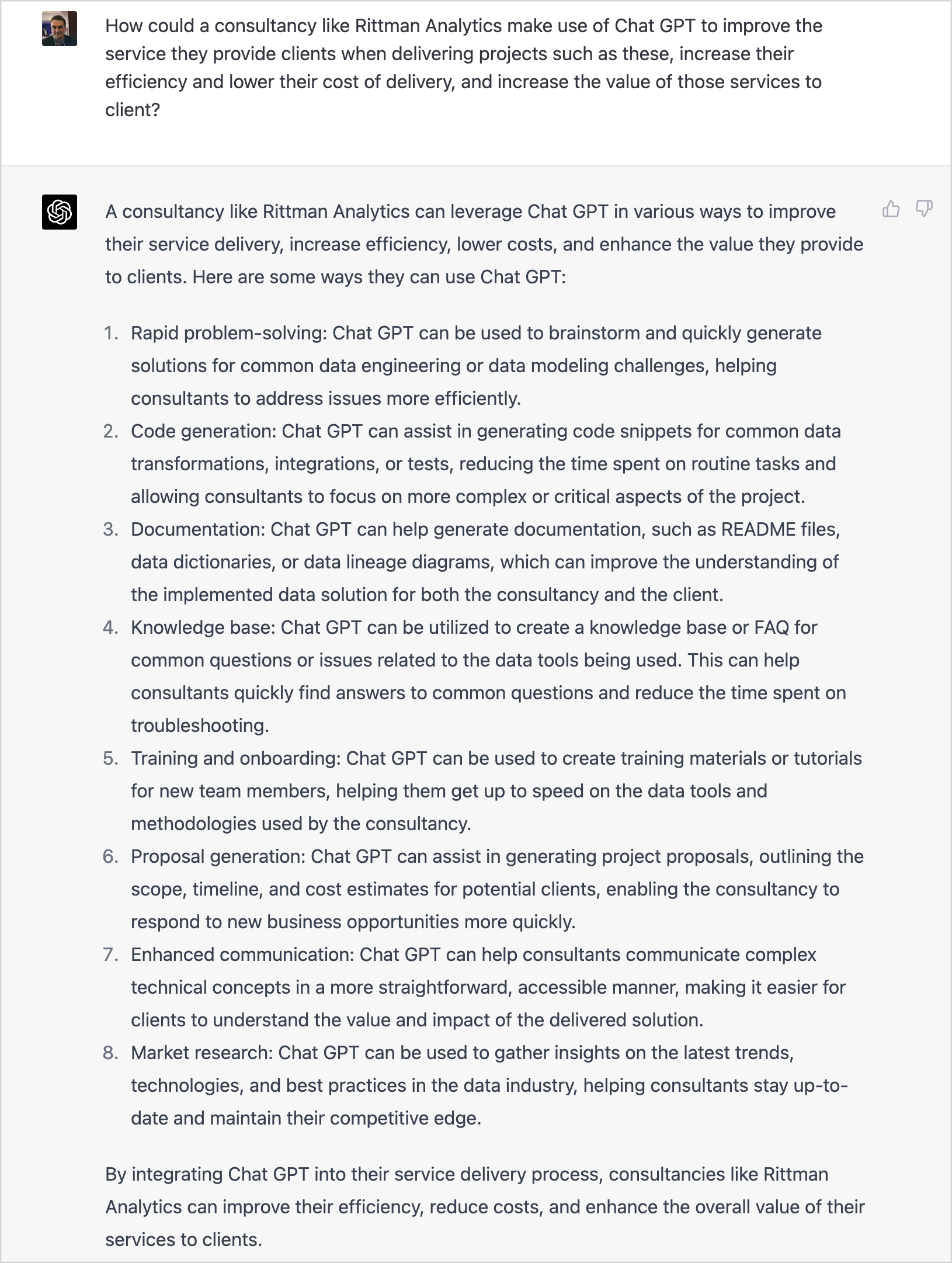

So fittingly, I ask ChatGPT how it could best be used to improve our consultancy’s products, services and internal processes.

And it certainly handles the relatively simple, or at least relatively easy to define, tasks that are put to it, and doesn’t even try to conveniently forget to to the housekeeping tasks such as writing package documentation that takes time away from coding and other more interesting work.

But it makes mistakes, and howlers such as the JARO_WINKLER one in this project, and only does what you ask it to do and no more. As such it’s essentially another junior developer, full of enthusiasm, opinions and plausible bullshit that if supervised and instructed properly can multiply the productivity of a senior consultant but you wouldn’t dare leave alone with a client to advise on their data analytics strategy.

It’s even more of a dangerous prospect in the hands of an otherwise prospective client who knows what they want, thinks they know how to do it and just needs to find someone who can just do what he or she asks. These are often the most dangerous (but also entertaining and open-minded) clients who more than anything need saving from themselves and steering into the solution that actually meets their needs rather than delivering exactly what they asked for and it blowing-up shortly thereafter.

Make no mistake — future iterations of generative AI, public services such as ChatGPT and domain-specific versions on these underlying models will make IT and computing to-date look like the spinning jennys that were the start of the industrial revolution.

But there’s more to delivering a successful, valuable and scalable modern data stack implementation than just writing dbt, Looker and Python code, starting with defining the actual problem to be solved and ensuring that what gets delivered makes sense, solves the client’s problem and enables the types of business transformation and innovative services described by businesses such as Breakthrough, Conversion Interactive Agency and Torticity in new client testimonials just added to our website.

INTERESTED? FIND OUT MORE

Rittman Analytics is a boutique analytics consultancy specializing in the modern data stack who can help you centralise your data sources, optimize your marketing activity and enable your end-users and data team with best practices and modern analytics workflow provided by a team of smart and experienced consultants managing a team of robotic, enthusiastic bullshitting large language models that do all the grunt work.

If you’re looking for some help and assistance analyzing and understanding your customer behaviour, or to help build-out your analytics capabilities and data team using a modern, flexible and modular data stack, contact us now to organize a 100%-free, no-obligation call — we’d love to hear from you!

Recommended Posts

See you at Coalesce 2024 in Las Vegas, October 7th – 10th 2024

Data Lakehouses, Post-Modern Data Stacks and Enabling Gen AI: The Rittman Analytics Guide to Modernising Data Analytics in 2024 — Rittman Analytics

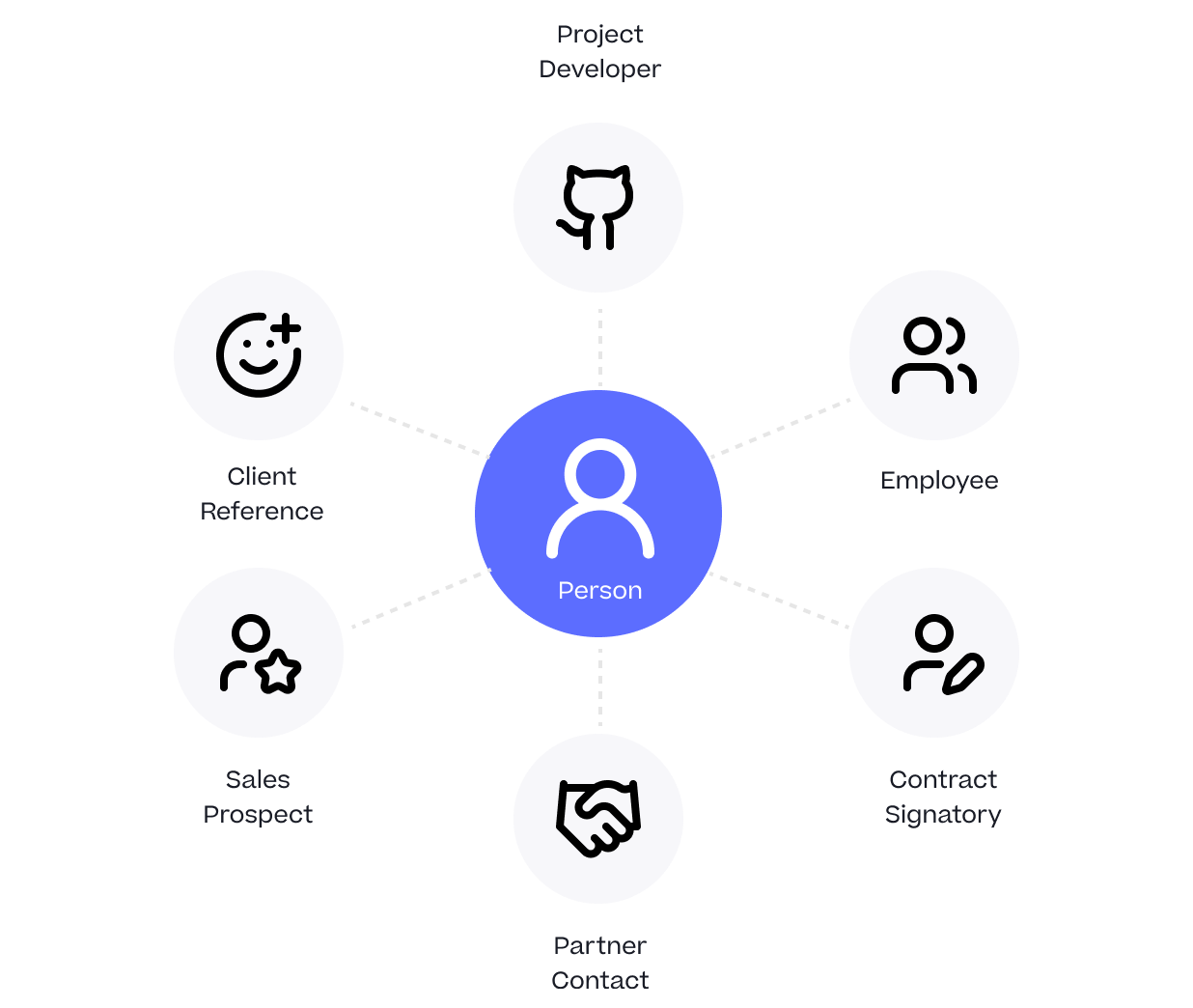

One Person Many Roles: Designing a Unified Person Dimension in Google BigQuery